A couple of years ago, we would look away when we heard buzzwords like vibe coding or context engineering. These days, many articles and threads include these terms, but where do they come from?

Who coined them? And what if you don’t know some of them? Trust me, I didn’t know many of them either. This article is here to fill that gap.

Next time you come across these terms, you’ll know their origin, meaning, and how they’ve evolved. Let’s start with the hottest one, vibe coding.

Vibe Coding

Source: Introduced by Andrej Karpathy

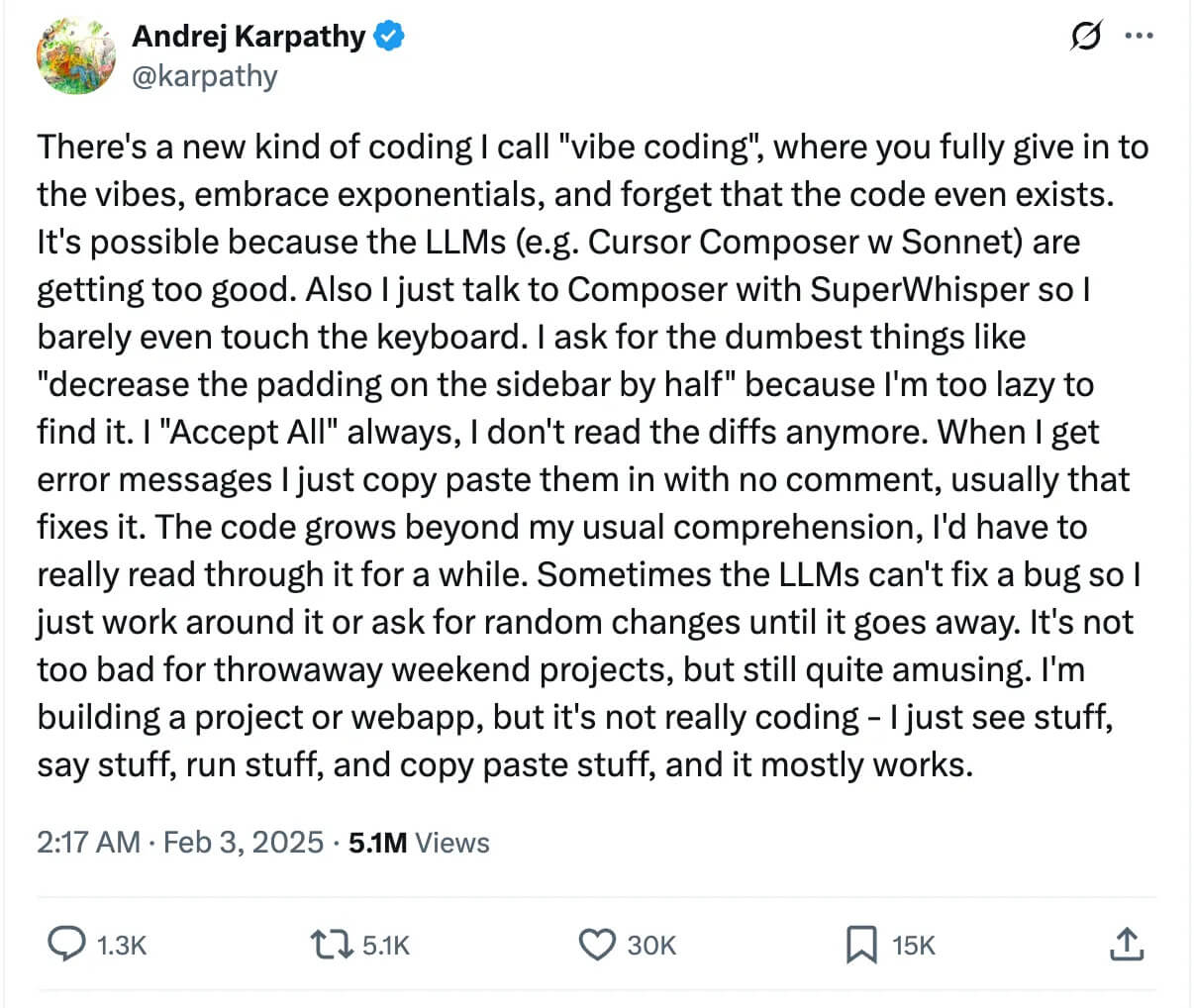

The term vibe coding was first introduced by Andrej Karpathy on X: Reference

Andrej Karpathy is the former Director of AI at Tesla and was a member of OpenAI’s founding team.

Vibe coding describes a new kind of programming where you describe what you want in plain language and let the AI write the code, as we do in Gemini CLI, Cursor, or, more simply, ChatGPT.

For instance, as the LearnAIWithMe team, we built a website purely by vibe coding; it automates the AI-startup idea generation process. Until we uploaded it to GitHub, we didn’t even know where the files were.

Especially with the rise of no-code tools, the term vibe coding fits what many developers do daily these days.

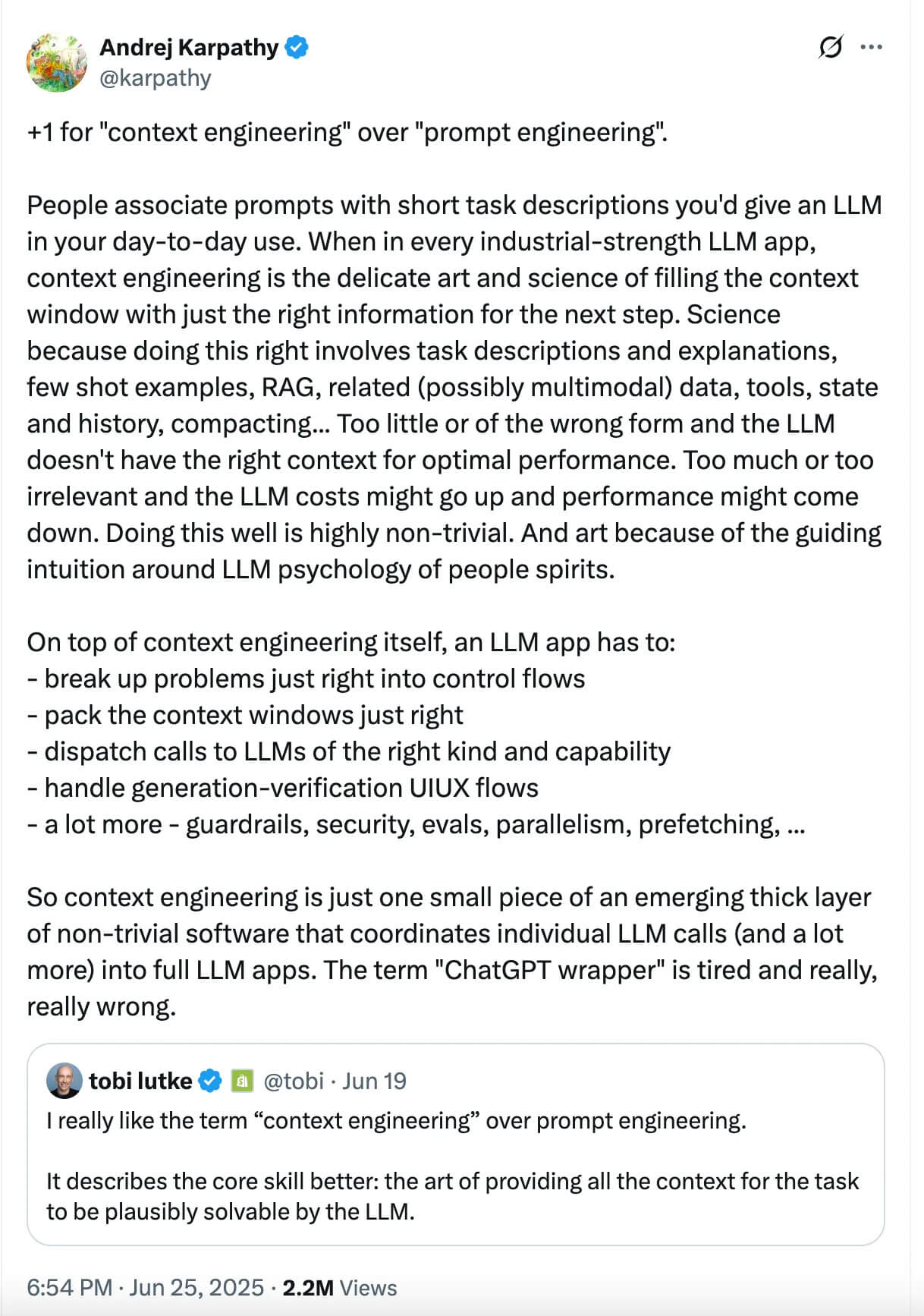

Context Engineering

Source: Tobi Lutke + Karpathy

Context engineering is like prompt engineering, but it goes further. Crafting prompts is a useful skill, but you also need to supply the right context with them, because otherwise:

- Your API cost would be huge.

- Your results would be worse.

Even though LLMs like GPT-4.1 have huge context windows of over 1 million tokens, you still need to optimize your context to get better results.
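To make the idea concrete, here is a minimal sketch of one way to trim context before sending it to a model: rank the candidate chunks by relevance and keep only what fits in a token budget. Everything here is an assumption for illustration: the function names are made up, the "4 characters per token" estimate is a rough rule of thumb, and the word-overlap score stands in for a real relevance model.

```python
def estimate_tokens(text: str) -> int:
    """Very rough token estimate: about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def score(chunk: str, question: str) -> int:
    """Toy relevance score: how many distinct question words appear in the chunk."""
    question_words = set(question.lower().split())
    chunk_words = set(chunk.lower().split())
    return len(question_words & chunk_words)


def build_context(chunks: list[str], question: str, token_budget: int) -> list[str]:
    """Keep the most relevant chunks that fit inside the token budget."""
    scored = [(score(chunk, question), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # most relevant first

    selected, used = [], 0
    for relevance, chunk in scored:
        if relevance == 0:
            break  # everything after this point is irrelevant too
        cost = estimate_tokens(chunk)
        if used + cost <= token_budget:
            selected.append(chunk)
            used += cost
    return selected


chunks = [
    "Our refund policy allows returns within 30 days.",
    "The office cafeteria serves lunch at noon.",
    "Refund requests need the original receipt.",
]
print(build_context(chunks, "refund policy details", token_budget=25))
```

The cafeteria chunk never reaches the model, which is the whole point: fewer tokens to pay for and less noise for the model to wade through.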

Prompt Engineering

Source: Evolved by the AI community (2020–2022)


Back in the early days of GPT-3, most of us were simply typing random sentences into the AI and hoping to crack the entire system. Then people realized that how you ask matters as much as what you ask.

That’s when prompt engineering was born. As far as I could find in my research, nobody officially coined or trademarked the term, but let me know in the comments if you know the exact source.

It went mainstream after ChatGPT dropped. On Reddit threads, Medium posts, and Discord chats, we shared tricks like “These 3 Prompts Will Change How You Brainstorm Ideas Indefinitely!” or “Stop Asking ChatGPT the Wrong Way! 3 Techniques That 10x Your Answers!”

It exploded.

Suddenly, being a prompt engineer was a LinkedIn headline, and, briefly, a six-figure job.

Constitutional AI

Source: Introduced by Anthropic (December 2022)

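AI models behave because we told them to. But what if they could tell themselves what’s right and wrong? That’s the idea behind Constitutional AI, coined by Anthropic in late 2022. It sounds like the rules robots follow in science fiction, right?

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence, as long as such protection does not conflict with the First or Second Law.

These are Isaac Asimov’s Three Laws of Robotics.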

Back to the topic: instead of feeding the model thousands of labeled examples like “don’t say this,” Anthropic gave it a constitution, a fixed list of principles, and trained it to critique and revise its own answers based on those principles.

It’s like teaching AI ethics by handing it the Constitution and saying, “Good luck.” Surprisingly, it worked.
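The critique-and-revise idea can be sketched in a few lines. This is only an illustration under loud assumptions: the two principles are made up (they are not Anthropic’s actual constitution), `model` is a hypothetical stand-in for any function that takes a prompt and returns text, and the sketch shows only the loop itself, not the training step where the revised answers are used to fine-tune the model.

```python
from typing import Callable, Sequence

# Hypothetical principles for illustration; not Anthropic's actual constitution.
PRINCIPLES = (
    "Choose the response that is least likely to cause harm.",
    "Choose the response that is most honest about uncertainty.",
)


def critique_and_revise(
    prompt: str,
    model: Callable[[str], str],
    principles: Sequence[str] = PRINCIPLES,
) -> str:
    """Draft an answer, then critique and revise it once per principle."""
    answer = model(prompt)
    for principle in principles:
        # Ask the model to judge its own answer against one principle.
        critique = model(
            f"Principle: {principle}\nAnswer: {answer}\n"
            "Point out how the answer violates the principle, if at all."
        )
        # Ask the model to rewrite the answer in light of that critique.
        answer = model(
            f"Principle: {principle}\nAnswer: {answer}\nCritique: {critique}\n"
            "Rewrite the answer so that it follows the principle."
        )
    return answer
```

With two principles, the model is called five times: once for the draft, then one critique and one revision per principle.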

And these days, Anthropic’s models work better than ChatGPT’s models (IMO).

Chain-of-Thought Prompting

Source: Jason Wei (Google Brain), January 2022


“Let’s think step by step.” That phrase changed prompt engineering.

Jason Wei and the Google Brain team published Chain-of-Thought Prompting Elicits Reasoning in Large Language Models in early 2022. They discovered that LLMs perform much better on complex tasks when they see examples of step-by-step thinking before being asked to solve something. (The exact phrase “Let’s think step by step” comes from a follow-up paper on zero-shot prompting by Kojima et al. later that year.)

This was the moment we stopped treating LLMs like Google Search and started talking with them and walking them through problems step by step.
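Here is a minimal sketch of what “showing examples of step-by-step thinking” looks like in practice: a few-shot prompt where each example answer spells out its reasoning before the final answer. The worked example and the function name are made up for illustration.

```python
from typing import Sequence

# One worked example whose answer shows its reasoning (made up for illustration).
EXAMPLES = (
    {
        "question": "A shop has 5 apples and buys 2 boxes of 6 apples. How many apples does it have now?",
        "reasoning": "2 boxes of 6 apples is 12 apples. 5 + 12 = 17.",
        "answer": "17",
    },
)


def build_cot_prompt(question: str, examples: Sequence[dict] = EXAMPLES) -> str:
    """Build a few-shot prompt whose examples show reasoning before the answer."""
    parts = []
    for example in examples:
        parts.append(
            f"Q: {example['question']}\n"
            f"A: {example['reasoning']} The answer is {example['answer']}."
        )
    # The new question ends with an open "A:" so the model continues in the same style.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


print(build_cot_prompt("A bus has 8 people. 3 get off and 5 get on. How many are on the bus?"))
```

Because the example answer reasons out loud, the model tends to do the same for the new question instead of jumping straight to a number.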

Today, the “chain-of-thought” method is my go-to technique, especially when building AI agents for my clients, because, in my experience, it outperforms every other method I have tried.

If you combine this technique with RAG, you will receive “amazing” feedback, I assure you.

MCP (Model Context Protocol)

Source: Introduced by Anthropic (November 2024)

MCP, or Model Context Protocol, was introduced in late 2024. It is similar to the plugins ChatGPT released a couple of years earlier: it enables LLMs to communicate with tools, APIs, files, databases, and more, so you can combine an LLM’s power with other tools’ capabilities.

The best part? It wasn’t just a whitepaper idea. Anthropic open-sourced it, and other labs like OpenAI and Google DeepMind adopted it within months.
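Under the hood, MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below builds such a request and answers it with a toy in-process handler. It is a simplified illustration, not the official SDK: the `get_weather` tool, the `TOOLS` registry, and both helper functions are hypothetical, and a real server also handles initialization, tool listing, and a transport such as stdio or HTTP.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool with a canned reply for the demo."""
    return f"It is sunny in {city}."


# Hypothetical tool registry; a real MCP server advertises its tools to the client.
TOOLS = {"get_weather": get_weather}


def make_tool_call(request_id: int, name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


def error(request_id, code: int, message: str) -> str:
    """Wrap a JSON-RPC error response."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Toy server side: look up the tool, run it, return the result as text content."""
    request = json.loads(raw)
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return error(request_id, -32601, "Method not found")  # standard JSON-RPC code
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return error(request_id, -32602, f"Unknown tool: {params.get('name')}")
    text = tool(**params.get("arguments", {}))
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "result": {"content": [{"type": "text", "text": text}]}})


request = make_tool_call(1, "get_weather", {"city": "Paris"})
print(handle_request(request))
```

The useful part of the design is that the LLM side only needs to produce a tool name and a dictionary of arguments; everything tool-specific lives behind the server.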

RAG (Retrieval-Augmented Generation)

Source: Introduced by Facebook AI Research (2020)

Before RAG, LLMs could only guess based on their training data. If you have been using ChatGPT for a couple of years, you might remember when it didn’t have web access. It was good, but those were not the best times.

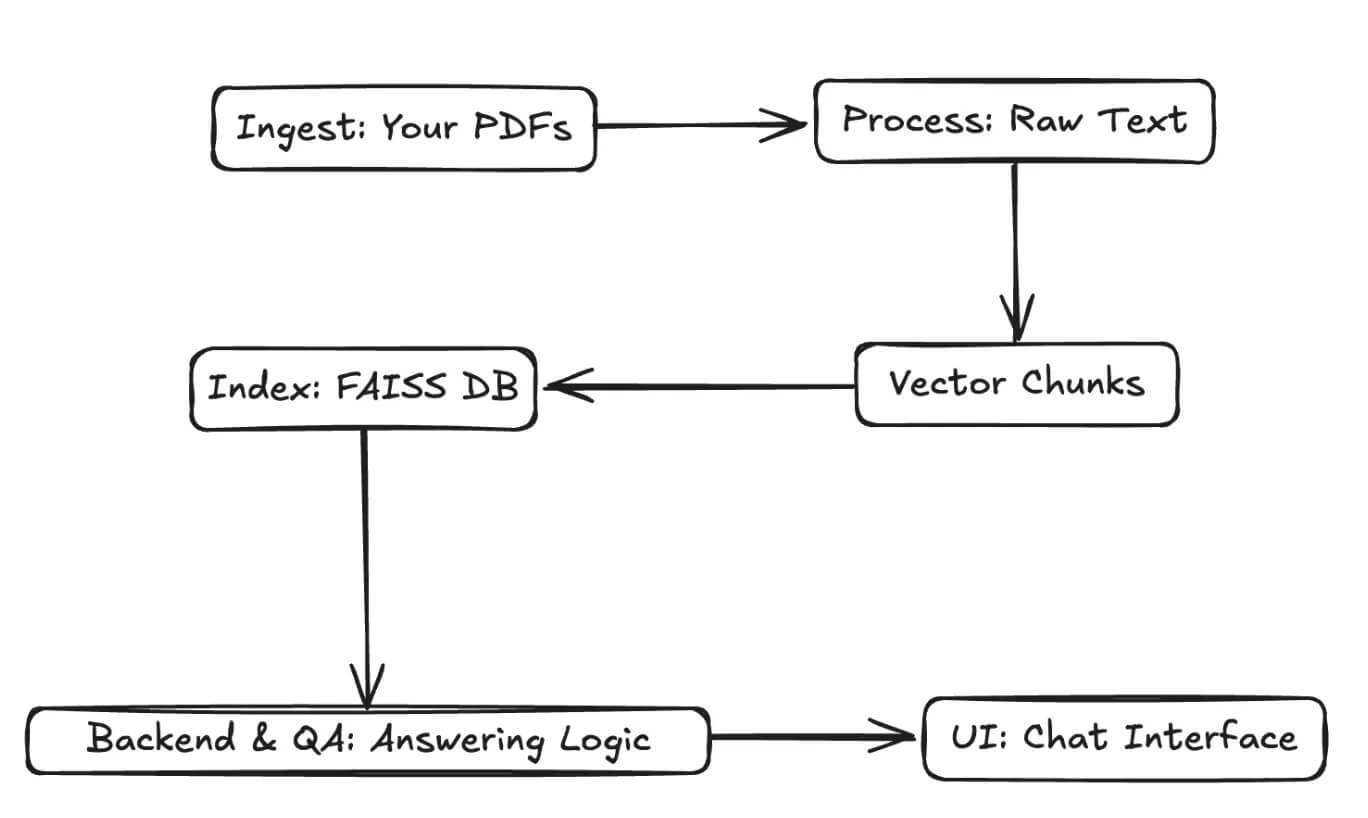

RAG, on the other hand, is designed to pull in external data before generating an answer. To do that, it first splits your documents into chunks, converts each chunk into an embedding, and stores the embeddings in a vector database. When you ask a question, it retrieves the most relevant chunks and passes them to the LLM as context for the answer.

While some setups connect RAG to live web search, it’s most commonly used with your own documents these days.
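The chunk, store, retrieve, and answer-with-context flow can be sketched without any external library. This is a toy version under stated assumptions: word counts stand in for real embeddings, a plain Python list stands in for the vector database, and the final prompt would be sent to whichever LLM you use. All function names are made up for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 12) -> list[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Stand-in embedding: lowercase word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, index: list[tuple[str, Counter]], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    query = embed(question)
    ranked = sorted(index, key=lambda item: cosine(query, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Put the retrieved chunks in front of the question."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


documents = [
    "The warranty covers battery defects for two years.",
    "Shipping takes five business days within Europe.",
    "Our office is closed on public holidays.",
]
# The "vector database": every chunk stored next to its embedding.
index = [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc)]

question = "How long does the warranty cover the battery?"
print(build_prompt(question, retrieve(question, index, k=1)))
```

Swapping `embed` for a real embedding model and the list for a vector database changes the quality of retrieval, but the shape of the pipeline stays the same.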

RAG schema

Final Thoughts

Thanks for reading this one. I know more terms, but I don’t want to spend time on an idea that hasn’t been validated. So, if you liked it, please leave claps and comments to let me know, and I’ll continue writing about it.

For instance, do you know the jailbreak prompts that made ChatGPT answer the questions it usually would not answer?

If you want me to send the AI Builders Playbook, subscribe to me on my Substack here.

“Machine intelligence is the last invention that humanity will ever need to make.” Nick Bostrom
